Best Practices for Reproducible Science: Lessons from 2400+ Papers

2025-04-10

Who am I?

Lars Vilhuber

Executive Director of the Labor Dynamics Institute and Senior Research Associate in the Economics Department at Cornell University, and the American Economic Association’s Data Editor.

Data Editor of the AEA

2,389 manuscripts and 4,440 reports; approximately 4,400 authors reached.

Best practices?

First: why?

Why reproducibility?

- Credibility

- Transparency (openness)

- Efficiency of scholarly discourse?

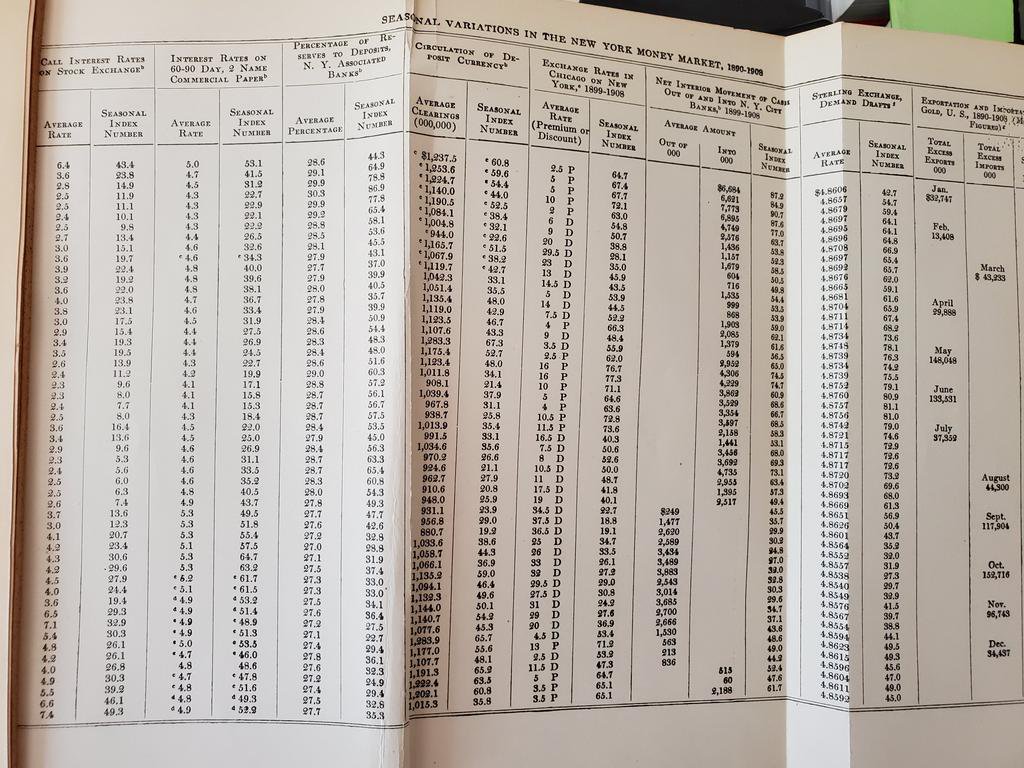

Why reproducibility?

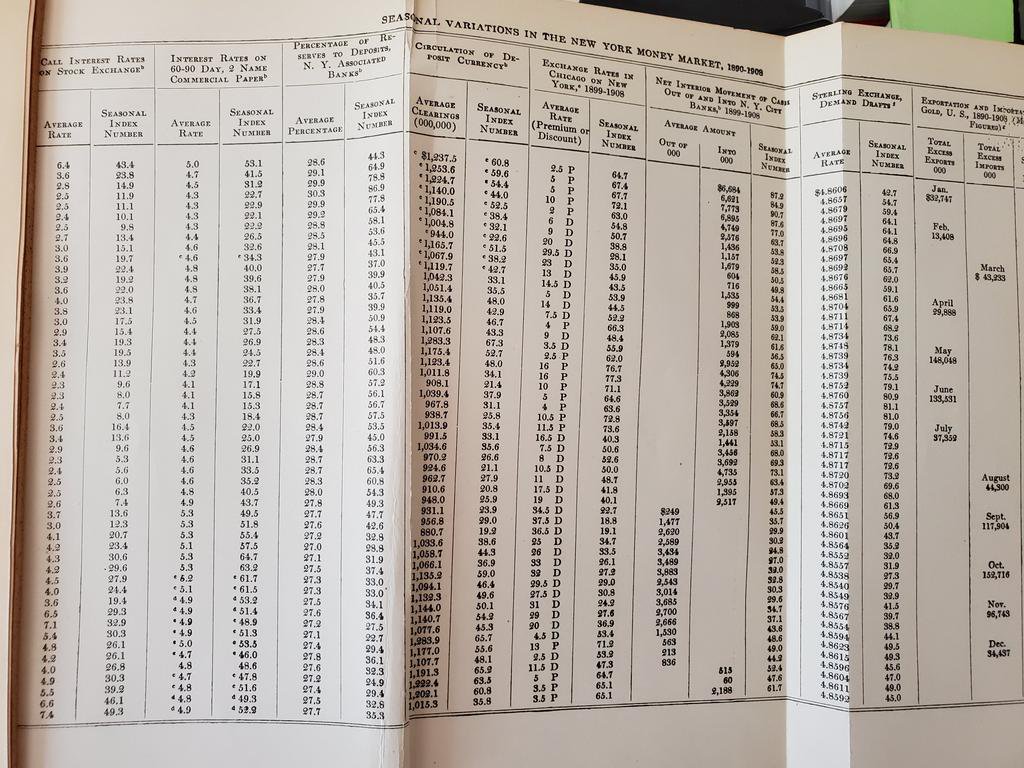

- Early publications (20th century) contained tables of data, and the math was simple (maybe)

- Data became electronic and were no longer included or cited

- Math was transcribed into code, and the code was no longer included

Increasingly broad consensus in academia

- FAIR principles

- Data Citation Principles

- Computational Reproducibility

FAIR Principles

FAIR:

- Findable

- Accessible

- Interoperable

- Reusable

Data Citation Principles

What is…

What is a replication package?

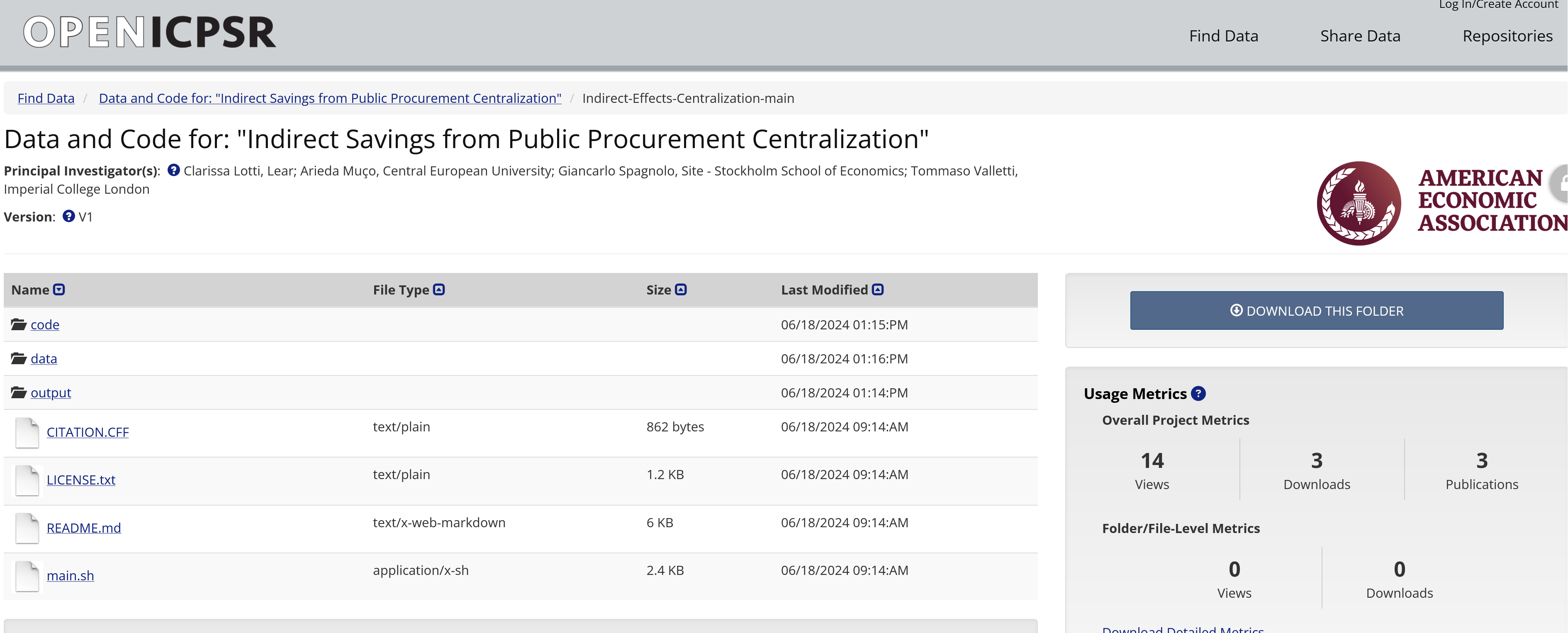

A Replication Package is

- Code

- Data

- Materials (for surveys, experiments, …)

- Instructions on how to obtain data not included

- Instructions on how to combine it all

- Known issues documented
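One way to make the "instructions on how to combine it all" executable is a single master script that runs every step in order. The sketch below assumes a Python-based package; the step names (`01_clean_data.py` and so on) and the helper `run_all` are hypothetical placeholders, not part of any AEA requirement.

```python
import subprocess
import sys
from pathlib import Path

# Hypothetical step list: replace with the package's actual scripts, in run order.
STEPS = ["01_clean_data.py", "02_analysis.py", "03_tables_figures.py"]


def run_all(code_dir, steps=STEPS):
    """Run each step in order with the current Python interpreter.

    Stops at the first failure: check=True raises CalledProcessError
    if a step exits with a non-zero status.
    """
    code_dir = Path(code_dir)
    for step in steps:
        # Resolve to an absolute path before switching the working directory
        script = (code_dir / step).resolve()
        print(f"Running {script} ...", flush=True)
        subprocess.run([sys.executable, str(script)], cwd=code_dir, check=True)
    return len(steps)
```

Called as `run_all("code")` from the package root, it stops at the first failing step, so a replicator sees exactly where a run broke.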

Complies with…

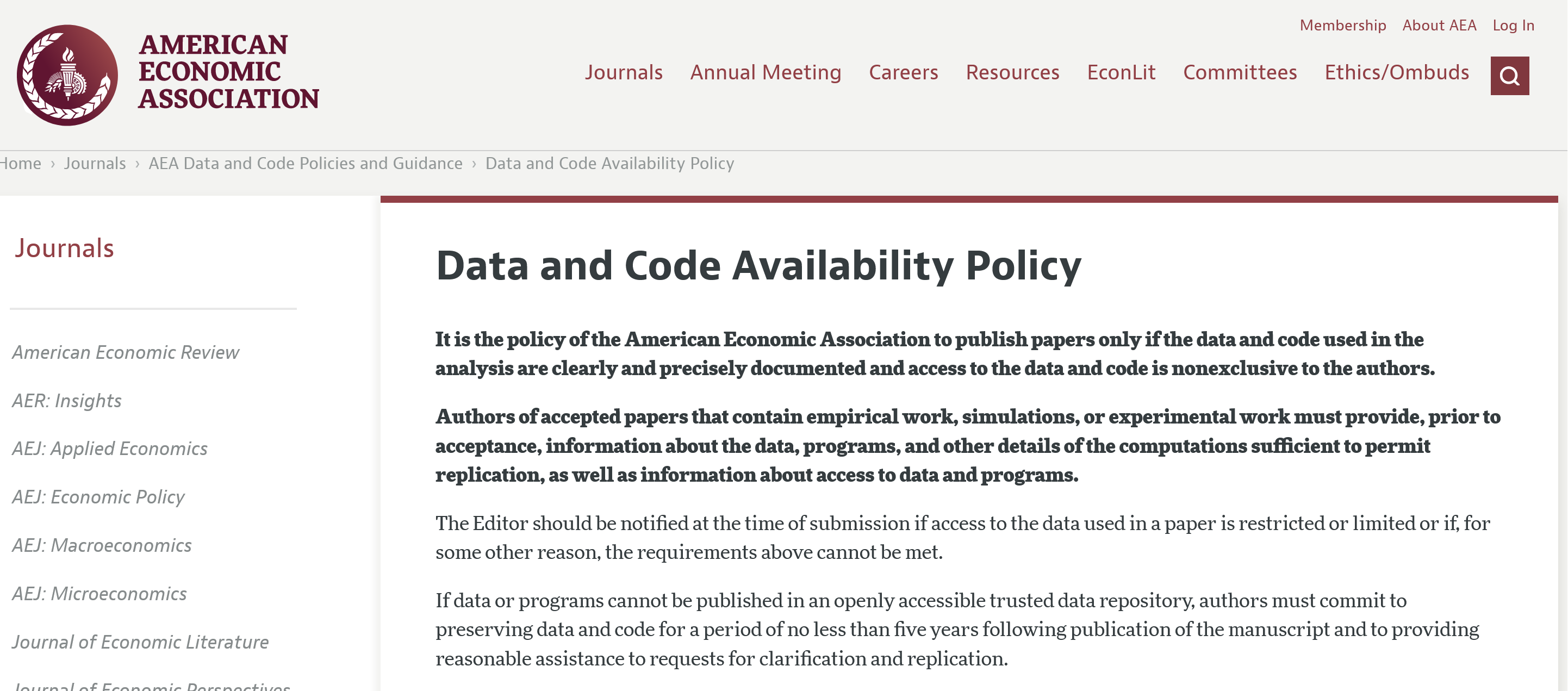

AEA policy

Is stored in…

- AEA Data and Code Repository

- Other trusted repositories

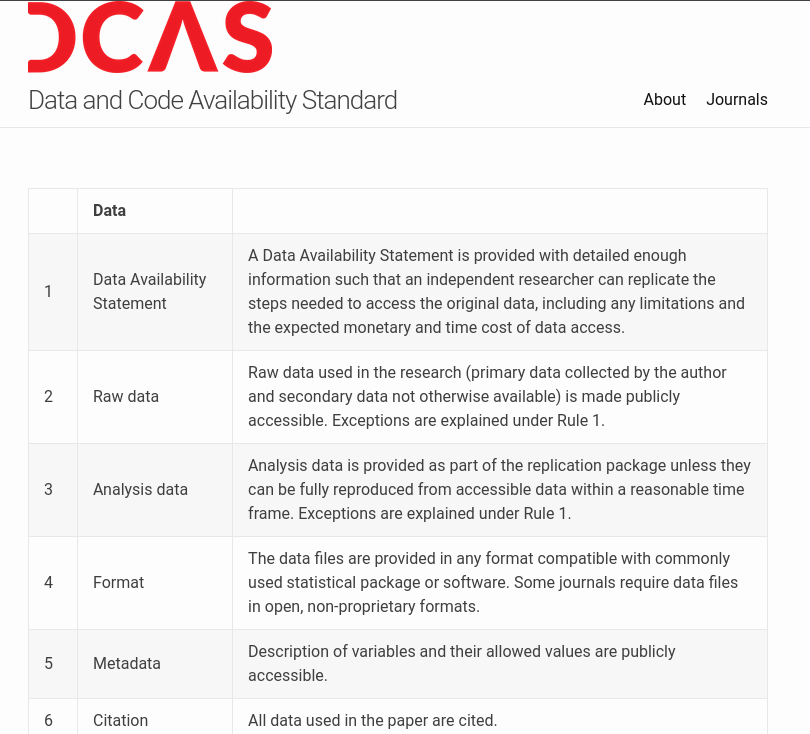

Tenets of the Policy

- Transparency

- Completeness

- Preservation

Best practices?

Summing up

- Why

  - Credibility

  - Transparency (openness)

  - Efficiency of scholarly discourse ([example])

- How

  - FAIR principles

  - Data Citation Principles

  - Computational Reproducibility

  - As Replication Packages

    - Code

    - Data

    - Materials (for surveys, experiments, …)

    - Instructions on how to obtain data not included

    - Instructions on how to combine it all

    - Known issues documented

Who?

- 🐇 Authors at conditional acceptance

- 🐢 Authors at submission

- 🐁 Authors at beginning of project

- 👴🏻👵🏽 Experienced researchers

- 👶🏽👶🏻 Junior researchers

- 👨‍🎓👩‍🎓 Ph.D. students

- 🧒👦 Undergraduates

How?

How to create reproducible research?

Habits

- Reproducibility from Day 1

- Adopt reproducible habits

- Take notes when you do things, not after

- Use version control

Tools

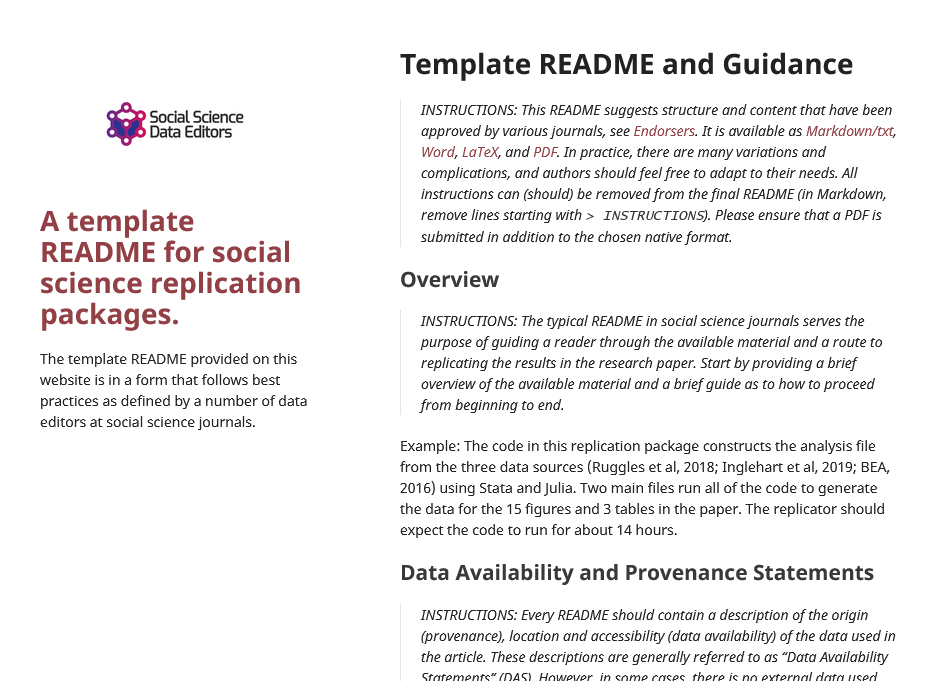

- Use the Template README

- Learn programming tricks that help streamline processing

- Save files and tables programmatically
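A minimal sketch of saving tables programmatically, assuming Python and only the standard library: the hypothetical helper `write_table` writes one set of made-up estimates both as CSV and as a LaTeX `tabular` that a manuscript can `\input`, so no number is ever copied by hand.

```python
import csv
from pathlib import Path


def write_table(rows, header, outdir, stem):
    """Write the same table as CSV and as a LaTeX tabular.

    Hypothetical helper: names and layout are illustrative only.
    rows: sequences of (label, number, number, ...).
    """
    outdir = Path(outdir)
    outdir.mkdir(parents=True, exist_ok=True)  # create the output folder if missing

    # Machine-readable version
    csv_path = outdir / f"{stem}.csv"
    with csv_path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)

    # Manuscript-ready version: first column left-aligned, numbers right-aligned
    tex_path = outdir / f"{stem}.tex"
    lines = [
        r"\begin{tabular}{l" + "r" * (len(header) - 1) + "}",
        " & ".join(header) + r" \\",
        r"\hline",
    ]
    for row in rows:
        cells = [row[0]] + [f"{x:.3f}" for x in row[1:]]
        lines.append(" & ".join(cells) + r" \\")
    lines.append(r"\end{tabular}")
    tex_path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return csv_path, tex_path


if __name__ == "__main__":
    # Hypothetical estimates; in a real project these come from the analysis code.
    results = [("Treatment", 0.1234, 0.0456), ("Control mean", 1.5, 0.2)]
    write_table(results, ["Variable", "Estimate", "Std. error"], "output/tables", "table1")
```

Re-running the analysis then regenerates both files, and the manuscript picks up the new numbers automatically.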

Strategy

Computational empathy: think of the next person to run this code. It could be you in five years!

Where to?

Choices

- Issue No. 1: Data access and preservation 💥 [a]

- Issue No. 2: Confidential data - same ⬆️! [a] [b]

- Lifecycle checking: Self-checking reproducibility and presentation

- New challenges: AI and Big Data, but same ⬆️!

- New methods: Transparency outsourced or certified

- Implementation in academia: Students!

Any further thoughts?

Thank you

Appendix

Resources

README

Vilhuber, L., Connolly, M., Koren, M., Llull, J., & Morrow, P. (2022). A template README for social science replication packages (v1.1). Social Science Data Editors. https://doi.org/10.5281/zenodo.7293838

You can download the Word, LaTeX, or Markdown version of the README with lots of examples.

Other guidance

Presentation on “Self Checking Reproducibility” and its associated website

Guidance when (some) data are confidential: https://labordynamicsinstitute.github.io/reproducibility-confidential/

Guidance for citations: https://social-science-data-editors.github.io/guidance/addtl-data-citation-guidance.html

Extra info

- This document’s source: https://github.com/larsvilhuber/yale-seminar-2025

- Licensed under CC BY-NC 4.0

Sources

- Images: NYT, Bluesky 1, 2, Ike Hayman/Wikimedia

Details on Transparency, etc.

Transparency

- Provenance of the data

- Processing of the data, from raw data to results (code)

It is the policy of the American Economic Association to publish papers only if the data used in the analysis are clearly and precisely documented and access to the data and code is clearly and precisely documented and is non-exclusive to the authors.

Completeness

- All data need to be identified, and access to them described

- All code needs to be described and provided

- All materials must be provided (survey forms, etc.)

Authors … must provide, prior to acceptance, the data, programs, and other details of the computations sufficient to permit replication

Preservation

- All data needs to be preserved for future replicators

- Ideally, within the replication package, subject to terms of use (ToU), for convenience

- Otherwise, in a trusted repository

Preservation

- Code must be in a trusted repository

- Usually, within the replication package

- Websites and GitHub are not acceptable

Historically

AER, 1911 (thanks to Stefano DellaVigna)

Modern preservation

Exceptions to the Policy

None

…

… there is a grey zone:

- When data do not belong to the researcher, there is no control over preservation or access!

- Sometimes, ToU prevent the researcher from revealing metadata (name of company, location)

Transparency again

- However:

  - No exception to the need to describe access (to own data and to others' data)

  - No exception to the need to fully describe processing (possibly with redacted code)

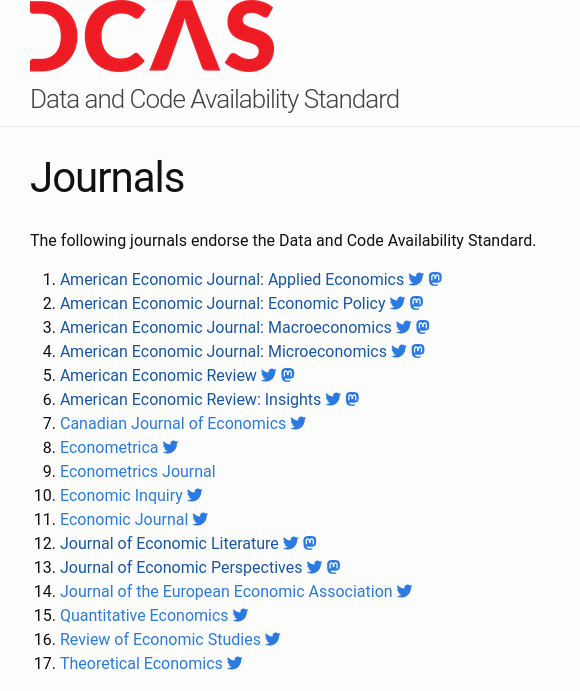

Reproducibility in Economics and beyond

Data Editors

Common policies

https://social-science-data-editors.github.io/

Elsewhere: Political Science

Elsewhere: Sociology

But!

Elsewhere: Sociology

Benefits

Building on the work of others

Roth, Jonathan. 2022. “Pretest with Caution: Event-Study Estimates after Testing for Parallel Trends.” American Economic Review: Insights 4 (3): 305–22. DOI: 10.1257/aeri.20210236

Notes: “I exclude 43 papers for which data to replicate the main event-study plot were unavailable.”

Building on the work of others: dCdH 2020

de Chaisemartin, Clément, and Xavier D’Haultfœuille. 2020. “Two-Way Fixed Effects Estimators with Heterogeneous Treatment Effects.” American Economic Review 110 (9): 2964–96. DOI: 10.1257/aer.20181169

The results from various other papers are recomputed to empirically demonstrate the relevance of the proposed methods.

Transparency elsewhere

Transparency outsourced

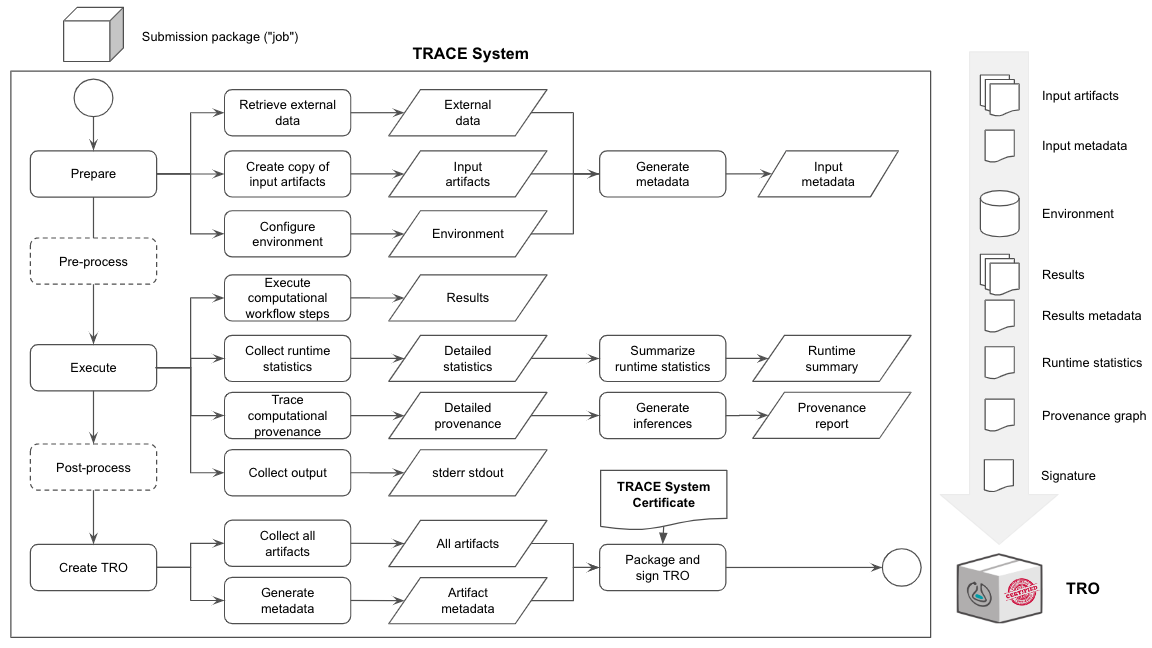

- A third party conducts the reproducibility check: not you, not me.

- This needs a common understanding, protocols, etc.

- AEA’s protocol

- We do this about a dozen times per year

Transparency outsourced

Why should I believe the third party?

- Trust

- Transparency

- Common methods

Transparency certified

- Providing information about the computing platforms themselves, including specific details about how computational transparency is supported.

- Packaging and signing resulting artifacts along with records of their execution using a standard format.
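The idea of keeping records of execution alongside the resulting artifacts can be illustrated with a checksum manifest. This is a sketch under stated assumptions: the JSON layout and the function names are hypothetical, it is not the standard format referred to above, and signing (which would require a key and a signing tool) is not shown.

```python
import hashlib
import json
import platform
import sys
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path):
    """Return the SHA-256 hex digest of a file, read in 64 KiB chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(root):
    """Record platform details and a checksum for every file under root.

    Hypothetical record layout; not a standard format.
    """
    root = Path(root)
    files = sorted(p for p in root.rglob("*") if p.is_file())
    return {
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "python": sys.version.split()[0],
        "platform": platform.platform(),
        "files": {p.relative_to(root).as_posix(): sha256_of(p) for p in files},
    }


def verify_manifest(root, manifest):
    """Return files that are missing or whose checksum no longer matches."""
    root = Path(root)
    changed = []
    for rel, digest in manifest["files"].items():
        p = root / rel
        if not p.is_file() or sha256_of(p) != digest:
            changed.append(rel)
    return changed


if __name__ == "__main__":
    # Hypothetical usage: record all outputs after a complete run of the package.
    out = Path("output")
    if out.is_dir():
        manifest = build_manifest(out)
        Path("manifest.json").write_text(json.dumps(manifest, indent=2), encoding="utf-8")
```

After a full run, a third party can re-run the package and call `verify_manifest` to list outputs that no longer match the recorded ones.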

Applications

- Limor, R-squared, cascad, World Bank!

- FSRDC? IRS?

- Metadata?

Footnotes

Data Citation Synthesis Group: Joint Declaration of Data Citation Principles. Martone M. (ed.) San Diego CA: FORCE11; 2014 https://www.force11.org/group/joint-declaration-data-citation-principles-final

Weeden, K. A. (2023). Crisis? What Crisis? Sociology’s Slow Progress Toward Scientific Transparency. Harvard Data Science Review, 5(4). https://doi.org/10.1162/99608f92.151c41e3